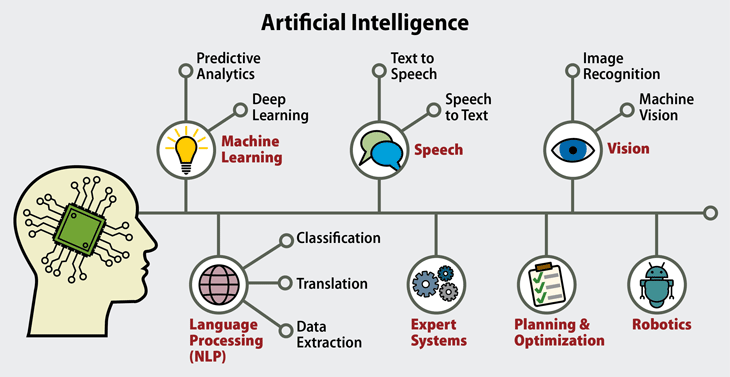

What is Artificial Intelligence (AI)?

AI refers to the ability of machines to perform tasks that typically require human cognitive abilities, such as learning, reasoning, problem-solving, and decision-making.

Why is artificial intelligence needed?

Here’s a concise overview of why AI is crucial.

Enhanced Efficiency and Productivity

AI automates repetitive tasks, freeing up human time and resources for more complex endeavors. Imagine AI-powered robots handling assembly lines in factories or chatbots answering customer queries 24/7, boosting productivity and streamlining operations.

Improved Decision-Making

AI can analyze vast amounts of data to identify patterns and trends that humans might miss, leading to better-informed decisions. This is invaluable in fields like healthcare, finance, and business, where data-driven insights can optimize strategies and improve outcomes.

Scientific Breakthroughs

AI is accelerating scientific progress by simulating complex systems, analyzing medical images, and even designing new materials. This has led to breakthroughs in drug discovery, personalized medicine, and understanding the universe.

Personalized Experiences

AI tailors services and products to individual preferences, from recommending movies you’ll love to suggesting a route that avoids traffic congestion. This personalization enhances user experience and fosters deeper engagement.

What is intelligence?

From witty banter to solving complex problems, intelligence is the spark that defines human brilliance. Yet even the most intricate insect behavior rarely earns the same title. Why? The secret lies in adaptability. A wasp may meticulously stash food, but her rigid routine crumbles at a simple change, such as the food being moved a few inches. True intelligence, unlike instinct, bends to new challenges, learns from experience, and rewrites the script. So the next time you marvel at human ingenuity, remember: intelligence isn’t just grand ideas; it’s the ability to adapt and thrive on life’s ever-changing stage.

Research in AI has focused chiefly on the following components of intelligence: learning, reasoning and logic, perception, decision-making, problem-solving, and language.

Learning

Imagine an AI that improves with experience, just like us. That’s the power of machine learning. AI algorithms analyze mountains of data, identify patterns, and adapt their behavior, enabling them to learn new tasks, recognize objects, and even make predictions.

Reasoning and Logic

AI isn’t just about crunching numbers; it can also reason and make logical deductions. This allows AI systems to solve complex problems, analyze situations, and even play strategic games like chess at superhuman levels.

Perception

The world around us is full of sights and sounds, and AI can interpret them too. Computer vision allows machines to “see” and understand images and videos, while natural language processing helps them comprehend spoken and written language.

Decision Making

With all this information at its disposal, AI needs to make informed decisions. This is where decision-making algorithms come in, weighing different options and choosing the optimal path based on the data and goals at hand.

Problem Solving

Humans excel at tackling challenges, and so can AI. Problem-solving algorithms analyze situations, identify potential solutions, and test them virtually, leading to innovative approaches and optimized outcomes.

Language

Humans communicate and process information through language, and AI is following suit. Natural language processing (NLP) allows machines to understand, generate, and manipulate human language. This opens up a vast range of possibilities, from chatbots that hold conversations to AI systems that analyze textual data and translate languages.

Methods and goals in AI

Symbolic vs. connectionist approaches

Artificial intelligence (AI) is revolutionizing our world, but how does it actually work? Understanding the different approaches to building intelligent machines is crucial for grasping their potential and limitations. Two key methods dominate the landscape: the top-down (symbolic) approach and the bottom-up (connectionist) approach.

Top-Down – The Symbolic Masters

Imagine building an AI letter recognizer from scratch. The top-down approach would involve analyzing letters mathematically, defining precise geometric descriptions for each shape. Algorithms would then compare scanned images to these descriptions, identifying individual letters. Think of it as teaching a robot with a rulebook: “Three horizontal strokes joined by one vertical stroke make an ‘E’; a single closed loop makes an ‘O.’”
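
To make the rulebook idea concrete, here is a minimal Python sketch of a top-down recognizer. It assumes that some earlier step has already reduced a scanned letter to counts of horizontal strokes, vertical strokes and closed loops; the feature names, the five letters and their descriptions are all hypothetical, chosen only to show how hand-written rules are matched against input.

```python
# A toy "rulebook" recognizer in the top-down spirit. The stroke features and
# counts are illustrative assumptions; extracting them from a real scanned
# image is assumed to have happened already.
LETTER_RULES = {
    "E": {"horizontal": 3, "vertical": 1, "loops": 0},
    "F": {"horizontal": 2, "vertical": 1, "loops": 0},
    "H": {"horizontal": 1, "vertical": 2, "loops": 0},
    "L": {"horizontal": 1, "vertical": 1, "loops": 0},
    "O": {"horizontal": 0, "vertical": 0, "loops": 1},
}


def recognize(features):
    """Return the letter whose description matches the features exactly, or None."""
    for letter, description in LETTER_RULES.items():
        # Missing features count as zero strokes of that kind.
        if all(features.get(name, 0) == count for name, count in description.items()):
            return letter
    return None


print(recognize({"horizontal": 3, "vertical": 1}))   # E
print(recognize({"loops": 1}))                       # O
# One stray smudge read as an extra stroke, and no rule matches:
print(recognize({"horizontal": 4, "vertical": 1}))   # None
```

The last call illustrates how brittle hand-written rules can be: nothing in the rulebook tolerates variation it was not explicitly told about.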

This approach, championed by pioneers like Allen Newell and Herbert Simon, emphasizes symbolic manipulation and logic. It excels in structured environments with well-defined rules, such as chess. However, the real world presents messy, ambiguous situations where rigid rules falter.

Bottom-Up – Mimicking the Brain’s Dance

Instead of pre-programmed rules, the bottom-up approach takes inspiration from the human brain. Imagine training an AI with an optical scanner by showing it thousands of letters. An intricate web of simulated neurons, connected and weighted like biological networks, would adjust its responses based on its “learning.” Gradually, the network would recognize patterns and identify letters without explicit instructions.

This neural network approach, inspired by psychologists such as Edward Thorndike and Donald Hebb, who proposed that learning consists of strengthening certain patterns of connections between neurons, aims to replicate the brain’s learning process. It shows promise in tackling complex, real-world challenges like image recognition and natural language processing. However, building truly complex neural networks that mimic the brain’s intricate workings remains a significant challenge.
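
Hebb’s idea can be sketched in a few lines. The Python below is a simplified, hypothetical illustration rather than a model of real neurons: each connection’s weight grows whenever its input and the output unit are active at the same time, so inputs that repeatedly fire together with the output end up able to trigger it on their own.

```python
def hebbian_train(examples, n_inputs, rate=0.1):
    """Hebb's rule: w[i] grows by rate * x[i] * y whenever input i and the output fire together."""
    weights = [0.0] * n_inputs
    for inputs, output in examples:
        for i, x in enumerate(inputs):
            weights[i] += rate * x * output   # changes only if both are active (1)
    return weights


def hebb_fires(weights, inputs, threshold):
    """The output unit fires if the weighted sum of its inputs reaches the threshold."""
    return sum(w * x for w, x in zip(weights, inputs)) >= threshold


# Hypothetical data: inputs 0 and 1 repeatedly fire with the output; inputs 2 and 3 never do.
examples = [([1, 1, 0, 0], 1)] * 5 + [([0, 0, 1, 1], 0)] * 5
weights = hebbian_train(examples, n_inputs=4)
print(hebb_fires(weights, [1, 0, 0, 0], threshold=0.4))   # True: a partial cue recalls the association
print(hebb_fires(weights, [0, 0, 1, 1], threshold=0.4))   # False
```

Note that this plain form of Hebb’s rule only ever strengthens connections; practical learning rules add some way to weaken them as well.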

The Balancing Act – Symbiosis or Standoff?

The top-down and bottom-up approaches aren’t mutually exclusive. Researchers today often combine elements of both, leveraging the strengths of each. Top-down rules can guide and shape the learning process of bottom-up networks, while neural networks can handle the messy nuances of real-world data that symbolic logic struggles with.

Challenges and the Road Ahead

Both approaches face their own hurdles. Symbolic systems often lack the flexibility to adapt to the real world, while replicating the brain’s complexity in bottom-up networks remains a daunting task. Even simulating a simple organism, such as a roundworm with roughly 300 neurons whose connection patterns are fully mapped, has proven remarkably difficult.

Despite these challenges, the quest to understand and build artificial intelligence continues. By continuing to develop and refine both top-down and bottom-up approaches, we inch closer to unlocking the true potential of AI for tackling complex problems and enriching our lives.

The Three Faces of AI – Artificial general intelligence (AGI), Applied Intelligence, and Cognitive Simulation

Artificial intelligence (AI) isn’t a monolith; it’s a multifaceted endeavor with diverse goals and approaches. Understanding these key goals is crucial for grasping the potential and limitations of this rapidly evolving field. Buckle up, as we explore the three main branches of AI research:

Artificial General Intelligence (AGI) – The Quest for a Thinking Machine

Imagine a machine so intelligent it rivals human cognition – that’s the ambitious dream of AGI, also known as strong AI. This branch seeks to create machines that not only excel at specific tasks but possess a broad, adaptable intelligence resembling our own. While promising, the path to AGI is fraught with challenges. Progress has been limited, and some critics even doubt its feasibility within the foreseeable future. Despite the skepticism, the allure of a truly “thinking machine” continues to drive research in this fascinating realm.

Applied AI – Smart Systems for the Real World

Not all AI dreams are about replicating human minds. Applied AI, alternatively called advanced information processing, focuses on practical applications. This branch aims to develop commercially viable “smart” systems that tackle real-world problems. From expert medical diagnosis tools to sophisticated stock-trading algorithms, applied AI has already achieved significant success. Its tangible benefits in various industries underscore its growing importance in today’s world.

Cognitive Simulation – Deciphering the Mind through Machines

The human mind remains a mysterious puzzle, but AI can help us unravel its secrets. Cognitive simulation leverages computers to test and refine theories about how we think, perceive, and remember. Researchers use these computer models to simulate processes like facial recognition, memory recall, and decision-making, providing valuable insights into the workings of the human mind. This branch transcends pure technological goals, contributing to scientific advancements in fields like neuroscience and cognitive psychology.

Cracking the Code of Intelligence – Alan Turing and the Birth of AI

The Enigma Awakens

Before and during World War II, a brilliant mind was at work. Alan Turing, the logician and codebreaker extraordinaire, wasn’t just cracking enemy ciphers; he was cracking the code of intelligence itself. As early as the mid-1930s, years before the war, Turing was thinking about abstract computing machines, a line of work that led him to envision machines capable of thought and laid the foundation for the field we know today as artificial intelligence (AI).

The Universal Architect

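Enter the Turing machine, a theoretical marvel described in 1936. This abstract device, with its unlimited memory and a scanner that reads and writes symbols, can in principle carry out any computation that can be spelled out as a step-by-step procedure, given enough time and memory. (Turing also proved that some problems, such as deciding in general whether a given machine will ever halt, are beyond any such machine.) It wasn’t just about calculations: Turing’s universal machine could operate on, and even modify, its own instructions stored in memory, a radical notion that Turing later suggested could provide a mechanism for machine learning, and that laid the groundwork for the computers we use today.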
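
The core of a Turing machine fits in a few lines: a tape, a read/write head, a current state and a table of rules. The Python sketch below simulates such a machine; the binary-increment rule table is a hypothetical example chosen for brevity, not a machine from Turing’s paper, and a universal machine would go one step further by reading the rule table itself from the tape.

```python
from collections import defaultdict

BLANK = "_"

# Transition table: (state, symbol read) -> (symbol to write, head move, next state).
# This binary-increment machine is an illustrative example, not one of Turing's own.
INCREMENT = {
    ("carry", "1"): ("0", -1, "carry"),   # 1 + carry = 0; keep carrying leftward
    ("carry", "0"): ("1", 0, "halt"),     # 0 + carry = 1; done
    ("carry", BLANK): ("1", 0, "halt"),   # ran off the left end: write a new leading 1
}


def run(table, tape_str, state, head, max_steps=10_000):
    """Simulate a one-tape Turing machine. The tape is a dict, so it grows on demand."""
    tape = defaultdict(lambda: BLANK, enumerate(tape_str))
    steps = 0
    while state != "halt":
        if steps == max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        write, move, state = table[(state, tape[head])]
        tape[head] = write
        head += move
        steps += 1
    used = [i for i, symbol in tape.items() if symbol != BLANK]
    return "".join(tape[i] for i in range(min(used), max(used) + 1))


print(run(INCREMENT, "1011", "carry", head=3))   # 1100  (11 + 1 = 12)
print(run(INCREMENT, "111", "carry", head=2))    # 1000  (7 + 1 = 8)
```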

From Bletchley Park to Brainstorming

While deciphering Nazi secrets at Bletchley Park, Turing did not let wartime urgency confine his thinking. He dreamt of machines learning from experience and finding their own way through heuristic problem solving, an idea decades ahead of its time. He also envisioned networks of artificial neurons, capable of adapting and evolving, concepts that would echo in the later field of connectionism.

A Public Spark in the Shadows

In 1947, in the earliest known public lecture to mention computer intelligence, Turing made a simple yet profound statement: “What we want is a machine that can learn from experience.” His 1948 report, “Intelligent Machinery,” introduced groundbreaking concepts, from reinforcement learning to genetic algorithms, but Turing never published it, and many of his ideas were later reinvented by others.

Turing’s Legacy – Beyond the Enigma

Though Turing’s life ended tragically and his contributions went unrecognized for years, his impact on AI is undeniable. He was the visionary architect who set the stage for an entire field, the codebreaker who cracked the code of intelligence itself. His theoretical work continues to inspire researchers and shape the landscape of AI – a testament to a mind that dared to dream beyond the limitations of its time.

Exploring AI Through Chess and the Turing Test

Turing’s Chess Vision Comes Alive!

Imagine a machine besting the world’s chess champion. In 1945, Alan Turing predicted that computers would one day play very good chess. Just over 50 years later, in 1997, IBM’s chess computer Deep Blue made that prophecy a reality by defeating the reigning world champion, Garry Kasparov, in a six-game match. While a triumph for AI, did chess-playing programs offer insights into human thought, as Turing hoped? The answer, surprisingly, is “Not quite.”

Beyond Brute Force – The Limits of Brute Power in Chess AI

Turing understood that exhaustive searches through every possible move wouldn’t work. He envisioned using heuristics to guide the search toward more promising paths. However, the true key to Deep Blue’s success wasn’t clever algorithms, but raw computing power. Its 256 processors churned through 200 million moves per second, leaving human minds and Turing’s heuristic approaches in the dust.
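
Heuristic search is easiest to see on a game far smaller than chess. The sketch below assumes a toy Nim game (take 1 to 3 stones; whoever takes the last stone wins) and uses depth-limited minimax with alpha-beta pruning, a standard game-search technique. It is not Deep Blue’s actual search, and the `heuristic` function merely stands in for the kind of evaluation Turing had in mind for cutting a search short.

```python
import math


def moves(stones):
    """Toy Nim: a player removes 1, 2 or 3 stones; whoever takes the last stone wins."""
    return [k for k in (1, 2, 3) if k <= stones]


def heuristic(stones):
    """Cheap guess used when the search is cut off, from the mover's point of view.
    In this toy game a pile that is a multiple of 4 is bad for the player to move."""
    return -1.0 if stones % 4 == 0 else 1.0


def negamax(stones, depth, alpha, beta, counter, prune=True):
    """Depth-limited minimax (negamax form) with optional alpha-beta pruning."""
    counter[0] += 1                      # count positions examined
    if stones == 0:
        return -1.0                      # opponent took the last stone: mover has lost
    if depth == 0:
        return heuristic(stones)         # search cut off: fall back on the heuristic
    best = -math.inf
    for k in moves(stones):
        score = -negamax(stones - k, depth - 1, -beta, -alpha, counter, prune)
        best = max(best, score)
        alpha = max(alpha, score)
        if prune and alpha >= beta:
            break                        # the opponent would never allow this line
    return best


def best_move(stones, depth=6, prune=True):
    """Return (stones to take, number of positions examined)."""
    counter = [0]
    alpha = -math.inf
    choice, choice_score = None, -math.inf
    for k in moves(stones):
        score = -negamax(stones - k, depth - 1, -math.inf, -alpha, counter, prune)
        if score > choice_score:
            choice, choice_score = k, score
        alpha = max(alpha, score)
    return choice, counter[0]


print(best_move(10, prune=False))
print(best_move(10, prune=True))
```

Both calls pick the same move (take 2 stones, leaving a multiple of 4), but the pruned search skips lines that cannot change the outcome and so examines fewer positions. In these terms, brute force means searching ever deeper rather than relying on a smarter evaluation.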

Noam Chomsky’s Bulldozer Analogy – Is Winning Everything?

Critics such as the renowned linguist Noam Chomsky have argued that a computer program beating a chess grandmaster is about as interesting as a bulldozer “winning” an Olympic weightlifting competition. In the same way, Deep Blue’s victory showcases computational prowess, not necessarily intelligence. Its brute-force approach tells us little about how humans navigate the complexities of chess, a nuanced blend of strategy, intuition, and psychology.

The Turing Test – Can Machines Mimic the Mind?

To sidestep the ambiguity of “intelligence,” Turing proposed his groundbreaking test in 1950. Imagine a human interrogator exchanging typed messages with two hidden participants, one a human and the other a computer. If, within a fixed time, the interrogator can’t reliably tell which is which, the computer “passes” the test and, by Turing’s criterion, counts as intelligent.

The Quest for Turing Triumph – Is ChatGPT the Answer?

The quest to pass the Turing test continues. In 2022, the large language model ChatGPT sparked debate with its seemingly human-like conversations. Some, like BuzzFeed data scientist Max Woolf, declared that it had passed the Turing test. Others argue that it did not pass a true Turing test, because in ordinary use ChatGPT often states explicitly that it is a language model.

The Road Ahead – Beyond Checkmate and Conversation

The journey towards truly intelligent machines continues. While chess serves as a fascinating testbed for AI advancement, the Turing test, with its limitations, may not be the ultimate arbiter of machine intelligence. Perhaps focusing on understanding and replicating the nuanced abilities of the human mind, rather than aiming for mimicry or brute force, will be the key to unlocking the true potential of AI.

Early Milestones in AI – Shaping the Future of Intelligent Systems (1951-1962)

Revolutionizing Checkers – Christopher Strachey’s AI Breakthrough (1951-1952)

Delve into the origins of artificial intelligence as we explore the groundbreaking work of Christopher Strachey. In 1951, Strachey, later director of the Programming Research Group at the University of Oxford, wrote the earliest successful AI program. Running on the Ferranti Mark I computer at the University of Manchester, his checkers program could, by the summer of 1952, play a complete game at a reasonable speed, laying the groundwork for the AI landscape.

Machine Learning Unveiled – Shopper’s Intelligent Exploration (1952)

In 1952, Anthony Oettinger at the University of Cambridge published work on Shopper, the earliest successful demonstration of machine learning. Running on the EDSAC computer, Shopper inhabited a simulated mall of eight shops. When instructed to buy an item, it visited shops at random until it found one that stocked it, memorizing some of the items stocked in each shop it visited along the way. The next time it was sent for that item, or for any other item it had already seen, it went straight to the right shop. This simple form of learning is called rote learning, and it offered an early glimpse into the future of AI.
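
Shopper’s rote learning can be sketched as a random search plus a memory table. In this hypothetical Python version the shop contents are invented, and the program remembers every item it sees, whereas the original memorized only some of them.

```python
import random


class Shopper:
    """Toy version of Shopper's rote learning: wander at random, remember what you see.
    Remembering every item in a visited shop is a simplification; Shopper kept only some."""

    def __init__(self, shops, seed=0):
        self.shops = shops              # list of sets: the items each shop stocks
        self.memory = {}                # item -> index of a shop known to stock it
        self.rng = random.Random(seed)

    def buy(self, item):
        """Return how many shop visits it took to find `item`."""
        if item in self.memory:
            return 1                    # rote learning pays off: go straight there
        if not any(item in stock for stock in self.shops):
            raise ValueError(f"no shop stocks {item!r}")
        visits = 0
        while True:
            shop = self.rng.randrange(len(self.shops))   # trial and error
            visits += 1
            for stocked in self.shops[shop]:
                self.memory.setdefault(stocked, shop)    # memorize this shop's stock
            if item in self.shops[shop]:
                return visits


mall = [{"bread"}, {"tea", "coffee"}, {"nails"}, {"soap"},
        {"books"}, {"shoes"}, {"milk"}, {"lamps"}]      # eight hypothetical shops
shopper = Shopper(mall)
print(shopper.buy("tea"))      # number of random visits on the first trip
print(shopper.buy("tea"))      # 1
print(shopper.buy("coffee"))   # 1: seen while looking for tea
```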

U.S. Breakthrough – Arthur Samuel’s Checkers Revolution (1952-1962)

Crossing the Atlantic, AI reached new heights in the United States through the work of Arthur Samuel. In 1952, Samuel wrote the first AI program to run in the United States: a checkers program for the prototype of the IBM 701, which he extended considerably over the years. In 1955, Samuel added features that enabled the program to learn from experience, including mechanisms for both rote learning and generalization. These enhancements eventually led to the program winning a game against a former Connecticut checkers champion in 1962, securing its place in the annals of AI history.

Evolutionary Computing – A Pioneer Path to AI Advancements

From Checkers Champions to Neural Networks – Early Steps in Evolving Intelligence

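Samuel’s groundbreaking checkers program was more than just a game-winner; it was one of the first efforts at evolutionary computing. The program “evolved” by pitting a modified copy against the current best version, with the winner becoming the new standard. Evolutionary computing generalizes this idea: successive “generations” of a program are generated and evaluated automatically until a highly proficient solution emerges. This laid the foundation for a new approach to AI, where solutions evolve and adapt through generations.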
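
The champion-versus-challenger loop can be sketched as follows. Playing real checkers games is replaced here by a hypothetical `strength` score (closeness to an invented set of ideal evaluation weights), so treat this as an illustration of the selection scheme only, not of Samuel’s program.

```python
import random

IDEAL = [0.8, -0.3, 0.5]   # hypothetical "perfect" evaluation weights, unknown to the learner


def strength(weights):
    """Stand-in for playing strength: closer to IDEAL plays better (an assumption)."""
    return -sum((w - t) ** 2 for w, t in zip(weights, IDEAL))


def challenger_wins(challenger, champion):
    """Hypothetical match; a real system would play actual checkers games here."""
    return strength(challenger) > strength(champion)


def evolve(generations=2000, step=0.1, seed=1):
    rng = random.Random(seed)
    champion = [0.0, 0.0, 0.0]
    for _ in range(generations):
        challenger = [w + rng.gauss(0, step) for w in champion]   # modified copy
        if challenger_wins(challenger, champion):
            champion = challenger                                  # winner becomes the new standard
    return champion


best = evolve()
print(best, strength(best))
```

Because the champion is replaced only when it loses, playing strength never gets worse from one generation to the next.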

John Holland – Architecting a Multiprocessor Future for AI

John Holland, a key figure in evolutionary computing, envisioned a future where processing power would unlock new avenues for AI. His 1959 dissertation proposed multiprocessor computers, assigning individual processors to artificial neurons in a network. This vision later materialized in the 1980s with powerful supercomputers like the Thinking Machines Corporation’s 65,536-processor machine.

Beyond theory, Holland’s Michigan lab developed practical applications using genetic algorithms. From a chess program to models of single-cell organisms, these systems showcased the versatility of this evolutionary approach. Genetic algorithms have practical uses outside the lab too: in one application, a genetic algorithm works with a crime witness to generate a portrait of the perpetrator.
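
A genetic algorithm keeps a whole population of candidate solutions and breeds new generations through selection, crossover and mutation. The sketch below is a generic textbook version, not code from Holland’s lab; it solves the toy “OneMax” problem (maximize the number of 1 bits), and the population size, mutation rate, elitism and tournament selection are illustrative choices.

```python
import random


def fitness(bits):
    """Toy objective (OneMax): the number of 1 bits. Real uses plug in a domain score."""
    return sum(bits)


def genetic_algorithm(n_bits=20, pop_size=30, generations=60, mutation_rate=0.02, seed=0):
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]

    def tournament():
        """Selection: the fitter of two random individuals becomes a parent."""
        a, b = rng.sample(population, 2)
        return a if fitness(a) >= fitness(b) else b

    for _ in range(generations):
        next_gen = [max(population, key=fitness)]       # elitism: keep the best unchanged
        while len(next_gen) < pop_size:
            mom, dad = tournament(), tournament()
            cut = rng.randrange(1, n_bits)               # one-point crossover
            child = mom[:cut] + dad[cut:]
            child = [1 - b if rng.random() < mutation_rate else b for b in child]   # mutation
            next_gen.append(child)
        population = next_gen
    return max(population, key=fitness)


best = genetic_algorithm()
print(fitness(best), best)
```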

Logical Reasoning – Cornerstone of AI Intelligence

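Logical reasoning lies at the heart of intelligent thought, and AI research has long tackled this challenge. A landmark achievement came in 1955–56 with the Logic Theorist, written by Allen Newell, J. Clifford Shaw, and Herbert Simon, which proved theorems from Alfred North Whitehead and Bertrand Russell’s Principia Mathematica. For one theorem, it even found a proof more elegant than the one given in the books.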

The General Problem Solver (GPS), begun in 1957 by Newell, Simon, and Shaw, represented another stride. GPS was designed to solve a wide variety of puzzles using a trial-and-error technique. However, critics pointed out that GPS and similar programs lacked any capacity to learn: their intelligence was entirely secondhand, coming from whatever information the programmer explicitly built in. This critique highlighted what genuinely adaptable AI would require.
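
“Trial and error” over puzzle states can be made systematic with a breadth-first search. The sketch below solves the classic water-jug puzzle (measure 2 litres using 4-litre and 3-litre jugs). GPS itself used a more guided strategy known as means-ends analysis, so this is a simpler stand-in for the general idea of searching through possible moves.

```python
from collections import deque


def solve_jugs(cap_a=4, cap_b=3, goal=2):
    """Breadth-first trial and error over states (litres in jug A, litres in jug B).
    Returns the shortest sequence of states that leaves `goal` litres in either jug."""
    start = (0, 0)
    parent = {start: None}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        a, b = state
        if goal in state:                       # success: rebuild the path backwards
            path = []
            while state is not None:
                path.append(state)
                state = parent[state]
            return path[::-1]
        pour_ab = min(a, cap_b - b)             # how much A can pour into B
        pour_ba = min(b, cap_a - a)             # how much B can pour into A
        for nxt in [(cap_a, b), (a, cap_b),     # fill A, fill B
                    (0, b), (a, 0),             # empty A, empty B
                    (a - pour_ab, b + pour_ab), (a + pour_ba, b - pour_ba)]:
            if nxt not in parent:               # skip states already tried
                parent[nxt] = state
                queue.append(nxt)
    return None


print(solve_jugs())   # [(0, 0), (0, 3), (3, 0), (3, 3), (4, 2)]
```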

Early AI Conversations: Mimicking Intelligence with Eliza and Parry

The 1960s and early 1970s produced two AI programs that sparked fascination with conversations simulating human intelligence: Eliza and Parry.

Eliza – The Therapeutic AI

Created by Joseph Weizenbaum, Eliza emulated a Rogerian therapist, reflecting and rephrasing user statements. While not truly understanding the conversation, Eliza generated an illusion of empathy, engaging in seemingly meaningful dialogue.
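
Eliza’s reflecting trick can be approximated with pattern matching and pronoun swapping. The rules below are a tiny, hypothetical subset written in Eliza’s spirit, not Weizenbaum’s original script: the program matches the user’s sentence against a pattern, flips “my” to “your” and similar words, and slots the result into a canned reply, with no understanding involved.

```python
import re

# Swap first and second person so the reply points back at the user.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are",
               "you": "I", "your": "my", "i'm": "you are"}

# (pattern, response template) pairs: a tiny, hypothetical rule set in Eliza's spirit.
ELIZA_RULES = [
    (re.compile(r"i feel (.*)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"i am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"my (.*)", re.IGNORECASE), "Tell me more about your {0}."),
]


def reflect(fragment):
    """Swap pronouns word by word; words not in the table pass through unchanged."""
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())


def respond(statement):
    text = statement.strip().rstrip(".!?")
    for pattern, template in ELIZA_RULES:
        match = pattern.fullmatch(text)
        if match:
            return template.format(reflect(match.group(1)))
    return "Please, go on."   # fallback when no rule matches


print(respond("I feel ignored by my family."))   # Why do you feel ignored by your family?
print(respond("The weather is nice."))           # Please, go on.
```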

Parry – The Delusional AI

Developed by Kenneth Colby, Parry mimicked a paranoid personality, weaving pre-programmed responses into conversation. Psychiatrists often found it difficult to distinguish Parry from a real paranoiac, highlighting the program’s effectiveness in replicating human-like behavior.

Limitations of Early AI Dialogues

It’s crucial to remember that both Eliza and Parry relied heavily on pre-programmed responses and lacked true understanding. They offered an innovative glimpse into human-computer interaction, but they were far from achieving genuine intelligence.

Building the Foundation – AI Programming Languages

Specialized languages fueled the advancement of AI research.

IPL – The Pioneering Language

Newell, Simon, and Shaw developed IPL (Information Processing Language) while working on early AI programs. IPL’s strength lay in its “list” data structure, enabling complex branching and logical manipulation.

LISP – The Dominant Force

John McCarthy took the baton further, merging IPL elements with the lambda calculus to create LISP (List Processor) in 1960. LISP became the dominant language for AI research in the US for decades, shaping the early landscape of the field.

PROLOG – A European Perspective

PROLOG (Programmation en Logique) was conceived by Alain Colmerauer, who first implemented it in 1973, and was further developed by the logician Robert Kowalski. The language focuses on logical reasoning, using a powerful theorem-proving technique called resolution to determine whether a given statement follows logically from other statements. PROLOG gained significant traction in AI research, particularly in Europe and Japan.
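
PROLOG answers a query by working backwards from the goal: to prove something, it looks for a clause whose head matches and then tries to prove each condition in that clause’s body. The Python sketch below mimics this for the simplest case, propositional Horn clauses with no variables; real PROLOG adds variables and unification, and the facts shown are hypothetical.

```python
# Propositional Horn clauses: head -> list of alternative bodies (an empty body is a fact).
# Symbol names are hypothetical; real PROLOG clauses carry variables and use unification.
KB = {
    "mortal(socrates)": [["human(socrates)"]],
    "human(socrates)": [[]],
    "human(plato)": [[]],
}


def prove(goal, kb, depth=0, max_depth=50):
    """Backward chaining in the style of PROLOG's resolution: to prove a goal, find a
    clause whose head matches it and recursively prove every goal in that clause's body."""
    if depth > max_depth:
        return False                     # crude guard against circular rules
    for body in kb.get(goal, []):
        if all(prove(sub, kb, depth + 1, max_depth) for sub in body):
            return True
    return False


print(prove("mortal(socrates)", KB))   # True
print(prove("mortal(plato)", KB))      # False: no clause says Plato is mortal
```

As in PROLOG, failing to find a proof is reported as “false”, even though the knowledge base never says the statement is untrue.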

A Global Collaboration

The development of these early AI programming languages underscores the collaborative nature of AI research, with influences and contributions spanning Europe, the US, and beyond. These innovative languages laid the groundwork for future advancements, paving the way for the diverse and sophisticated AI systems we see today.

Microworlds: Training Grounds for Tiny AIs

Ever wonder how scientists unlock the secrets of intelligence?

Sometimes, it’s by taking a step back and building a simpler world — a miniature playground for AI to learn and grow. This is the magic of Microworlds!

Think Lego blocks meet logic circuits

Microworlds are controlled environments, like a virtual room filled with colorful shapes or a tiny robot exploring a table. Here, researchers can focus on specific skills like grasping objects, understanding language, or solving puzzles without getting bogged down by the messy complexities of the real world.

Meet SHRDLU, the Block-Stacking Champ!

One famous Microworld star is SHRDLU, a program written by Terry Winograd at MIT around 1970 that controlled a simulated robot arm in a world of blocks. It could follow typed instructions like “pick up the blue pyramid” and even answer questions about its own actions. Imagine telling your Alexa to build a castle out of Legos, and it actually does it; that’s kind of what SHRDLU was about!

But Microworlds Have Limits

While SHRDLU was initially hailed as a major breakthrough, Winograd himself soon concluded that it was a dead end. The tricks that worked in its tiny block world didn’t translate well to the messy, unpredictable world outside. It was like teaching a kid to add using only colored beads: they understand the rules, but it doesn’t mean they can suddenly do calculus.

Microworlds are Stepping Stones, Not Solutions

Although not the ultimate answer, Microworlds played a crucial role in AI’s journey. They taught us how to train AIs in controlled environments, break down complex tasks into smaller steps, and test new ideas without risking real-world disasters. Today, even though AIs are tackling grand challenges like driving cars and composing music, the lessons learned in these tiny virtual worlds still resonate.

Shakey – A Microworld Marvel (But Was It Fast Enough?)

Shaking up the AI scene in the late 1960s and early 1970s, Shakey wasn’t your average robot. Born from the Stanford Research Institute and the minds of Bertram Raphael, Nils Nilsson, and others, Shakey ventured into the exciting realm of microworlds.

Forget roaming city streets; Shakey’s playground was a specially built mini-universe complete with walls, doorways, and basic wooden blocks. This controlled environment, a hallmark of the microworld approach, allowed researchers to focus on specific challenges like navigation, obstacle avoidance, and object manipulation.

Think of it as AI training wheels. Shakey could master basic “tricks” like TURN, PUSH, and CLIMB-RAMP, paving the way for future robots to tackle more complex tasks.
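
Shakey’s planner, STRIPS, developed at SRI, described each action by its preconditions and by the facts it adds and deletes. The Python sketch below applies that representation to a hypothetical two-room world with a box and a ramp, using a plain breadth-first search to string basic actions into a plan. The action names echo Shakey’s repertoire, but the world and the search strategy are assumptions.

```python
from collections import deque
from typing import FrozenSet, NamedTuple


class Action(NamedTuple):
    name: str
    pre: FrozenSet[str]      # facts that must hold before the action
    add: FrozenSet[str]      # facts the action makes true
    delete: FrozenSet[str]   # facts the action makes false


def act(name, pre, add, delete=()):
    return Action(name, frozenset(pre), frozenset(add), frozenset(delete))


# Hypothetical world: rooms A and B joined by a door, a box, and a ramp up to a platform in A.
ACTIONS = [
    act("GO(A,B)", {"robot_in_A"}, {"robot_in_B"}, {"robot_in_A"}),
    act("GO(B,A)", {"robot_in_B"}, {"robot_in_A"}, {"robot_in_B"}),
    act("PUSH(box,B,A)", {"robot_in_B", "box_in_B"},
        {"robot_in_A", "box_in_A"}, {"robot_in_B", "box_in_B"}),
    act("CLIMB-RAMP", {"robot_in_A"}, {"robot_on_platform"}, {"robot_in_A"}),
]


def plan(start, goal, actions):
    """Breadth-first search over world states; returns the shortest list of action names."""
    start = frozenset(start)
    frontier = deque([(start, [])])
    seen = {start}
    while frontier:
        state, steps = frontier.popleft()
        if goal <= state:                       # every goal fact holds
            return steps
        for a in actions:
            if a.pre <= state:                  # action is applicable
                nxt = (state - a.delete) | a.add
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, steps + [a.name]))
    return None


print(plan({"robot_in_A", "box_in_B"}, {"box_in_A", "robot_on_platform"}, ACTIONS))
# ['GO(A,B)', 'PUSH(box,B,A)', 'CLIMB-RAMP']
```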

But it wasn’t all smooth sailing. Critics pointed out the limitations of Shakey’s miniature world. Unlike humans effortlessly navigating our messy reality, Shakey’s every action seemed painfully slow. What you could do in minutes took Shakey days – not exactly “agile” by today’s standards.

While Shakey’s speed left something to be desired, its contribution to AI research couldn’t be ignored. It proved the microworld approach’s potential for training and honing specific skills in controlled environments. This laid the groundwork for a revolutionary type of program: the expert system, which we’ll dive into next.

Expert Systems: Microworld Precision Powerhouses

Harnessing the Microworld for Expert Performance

Dive into the world of expert systems, miniature AI marvels housed within specific “microworlds” like a doctor’s diagnosis realm or a chemist’s lab. These systems, capturing the knowledge of human experts, tackle real-world problems with exceptional precision. Think of medical diagnosis that rivals specialist doctors or chemical analysis that rivals expert chemists; that’s the power of expert systems.

Knowledge & Inference – The Engine Room

Every expert system boasts two key components: a knowledge base (KB) and an inference engine. The KB stores all the crucial information for a specific domain, meticulously gathered from experts and organized into “if-then” rules. Think of it as the system’s brain, packed with expert knowledge.

The inference engine acts as the system’s muscle, wielding the KB’s knowledge to reach conclusions. It chains rules together, drawing connections and deductions like a miniature Sherlock Holmes. If the KB says “fever is symptom A” and “symptom A suggests disease B,” and the patient has a fever, the engine infers “the patient likely has disease B.”
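
The fever example can be run as a tiny forward-chaining inference engine: keep firing any rule whose conditions are all known facts until nothing new can be derived. The rule names in this Python sketch are hypothetical placeholders taken from that example.

```python
# Each rule: if every premise is a known fact, add the conclusion.
# Rule contents are hypothetical placeholders mirroring the fever example.
MEDICAL_RULES = [
    ({"fever"}, "symptom_A"),
    ({"symptom_A"}, "likely_disease_B"),
]


def forward_chain(facts, rules):
    """Fire rules repeatedly until no new fact can be derived (a fixed point)."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


print(sorted(forward_chain({"fever"}, MEDICAL_RULES)))
# ['fever', 'likely_disease_B', 'symptom_A']
```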

Fuzzy Logic – Embracing the Shades of Gray

Not all problems fit neatly into true or false boxes. That’s where fuzzy logic steps in. This clever approach embraces the ambiguity inherent in many situations, allowing the system to handle vague terms like “somewhat elevated” or “slightly cloudy.” By incorporating fuzzy logic, expert systems can tackle real-world complexities with greater nuance and accuracy.
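
In fuzzy logic, a statement such as “the temperature is somewhat elevated” holds to a degree between 0 and 1 rather than being simply true or false. This Python sketch uses triangular membership functions and takes the minimum as fuzzy AND, both common choices; the temperature ranges and the cough value are hypothetical.

```python
def triangular(x, left, peak, right):
    """Membership degree (0..1) of x in a triangle-shaped fuzzy set."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


def temperature_memberships(celsius):
    """Hypothetical fuzzy sets for body temperature; the breakpoints are illustrative."""
    return {
        "normal": triangular(celsius, 35.5, 36.8, 37.6),
        "somewhat elevated": triangular(celsius, 37.0, 37.8, 38.6),
        "high": triangular(celsius, 38.0, 39.5, 41.0),
    }


def fuzzy_and(*degrees):
    """A common choice for fuzzy AND: the minimum of the membership degrees."""
    return min(degrees)


m = temperature_memberships(37.4)
print(m)   # about 0.25 normal, 0.5 somewhat elevated, 0.0 high
# Rule: IF temperature is somewhat elevated AND cough is mild (degree 0.7) THEN suspect infection.
print(fuzzy_and(m["somewhat elevated"], 0.7))   # about 0.5
```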

DENDRAL – The AI Chemist Leading the Way

Meet DENDRAL, a pioneering expert system begun in 1965 at Stanford University. Armed with chemical knowledge, DENDRAL analyzed spectrographic data to determine the molecular structure of organic compounds. Imagine an AI whose skill at decoding complex chemical structures rivaled that of expert chemists; that’s the impact of DENDRAL.

MYCIN – Diagnosing Infections with AI Smarts

Early Diagnosis, Expert Insight

Enter MYCIN, a pioneering expert system begun in 1972 that tackled blood infections like a digital doctor. It analyzed symptoms and test results, requested additional information or laboratory tests when needed, and then suggested a probable diagnosis and recommended a course of treatment. Think of it as having an expert doctor on call 24/7, providing personalized care powered by AI.

Harnessing the Power of Rules

MYCIN’s magic lay in its roughly 500 “production rules,” each a miniature piece of expert knowledge expressed as an if-then rule. These rules covered various symptoms, tests, and treatments, allowing MYCIN to navigate complex medical scenarios with impressive accuracy. It performed at roughly the same level as specialists in blood infections and better than general practitioners, a testament to the power of well-designed AI systems.
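
MYCIN also attached a certainty factor (CF) between -1 and 1 to its rules and conclusions, and combined the evidence when several rules pointed to the same conclusion. The Python sketch below follows the combination formulas usually given in accounts of MYCIN; the 0.2 premise threshold and the example rule strengths should be treated as illustrative assumptions.

```python
def combine(cf1, cf2):
    """Combine two certainty factors in [-1, 1] that bear on the same hypothesis."""
    if cf1 >= 0 and cf2 >= 0:
        return cf1 + cf2 * (1 - cf1)          # two confirmations reinforce each other
    if cf1 < 0 and cf2 < 0:
        return cf1 + cf2 * (1 + cf1)          # two disconfirmations reinforce each other
    if min(abs(cf1), abs(cf2)) == 1:
        raise ValueError("a definite yes and a definite no cannot be combined")
    return (cf1 + cf2) / (1 - min(abs(cf1), abs(cf2)))   # conflicting evidence


def fire(rule_cf, premise_cfs, threshold=0.2):
    """A rule's contribution: its own CF scaled by its weakest premise (AND = min).
    Premises at or below the threshold are treated as not established."""
    strength = min(premise_cfs)
    return rule_cf * strength if strength > threshold else 0.0


evidence = [fire(0.6, [1.0]), fire(0.5, [0.8, 0.9])]   # two hypothetical rules, same organism
print(evidence)              # [0.6, 0.4]
print(combine(*evidence))    # about 0.76
```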

Limitations of Expertise

However, even expert systems like MYCIN have their blind spots. They lack common sense and awareness of their own limitations. Told that a patient with a gunshot wound is bleeding to death, MYCIN would search for a bacterial cause of the symptoms instead of recognizing the obvious one. Similarly, clerical errors in the data, such as a patient’s age and weight being accidentally transposed, could lead to absurd recommendations like an obviously wrong drug dosage, highlighting the need for careful validation and error-checking.

CYC

The Commonsense Colossus

Take CYC, another ambitious AI project that aimed to capture the entire realm of human common sense. Think of it as a vast encyclopedia of everyday knowledge, encompassing millions of “commonsense assertions” woven into intricate relationships. The goal? Enable CYC to draw inferences and reason beyond its programmed rules, similar to how humans use their natural understanding of the world.

Beyond Basic Rules

With its commonsense arsenal, CYC could perform impressive feats. Imagine inferring that someone finishing a marathon is wet, not because it’s explicitly programmed, but by understanding the connection between exertion, sweating, and wetness. This ability to think deeper opens doors for future AI systems capable of more nuanced and flexible reasoning.

Challenges and the Frame Problem

However, vast knowledge comes with its own hurdles. Searching and navigating this ocean of information efficiently remains a challenge. Imagine sifting through millions of rules to find the most relevant ones for a specific problem – that’s the frame problem in action. Some critics even believe it’s an insurmountable obstacle for symbolic AI like CYC, forever barring the path to truly intelligent systems.

The Future of Expert Systems

Will CYC crack the frame problem and achieve human-level knowledge? Only time will tell. But its ambition and successes, along with MYCIN’s early achievements, demonstrate the immense potential of expert systems for diagnosis, reasoning, and decision-making in various fields. As AI research continues to evolve, these early pioneers pave the way for even more impressive advancements, bringing us closer to machines that can truly think and understand like humans.

Connectionism and Neural Networks

Unlocking the Brain’s Secrets

Connectionism, also known as neuronlike computing, delves deep into the fascinating world of the human brain. It seeks to understand how we learn, remember, and process information not through rigid logic, but through the intricate dance of interconnected neurons. This approach emerged in the 1940s, driven by a powerful question: Can the brain’s remarkable abilities be explained by the principles of computing machines?

Pioneers of Neural Networks

Warren McCulloch and Walter Pitts, a neurophysiologist and a mathematician respectively, became the first architects of this new paradigm. In their groundbreaking 1943 paper, they proposed a radical idea: each neuron in the brain acts as a simple digital processor, and the brain as a whole is a form of computing machine built from a web of such units. This network-centric view suggested a dynamic, distributed way of understanding the mind.
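
A McCulloch-Pitts neuron can be written as a threshold unit: it fires (outputs 1) when enough excitatory inputs are active and no inhibitory input is. This Python sketch, a simplified rendering of the 1943 model, shows how such units implement basic logic, and how wiring several together computes XOR, which no single threshold unit can.

```python
def mp_neuron(inputs, threshold, inhibitory=()):
    """McCulloch-Pitts unit: any active inhibitory input vetoes firing; otherwise
    fire (1) if the number of active excitatory inputs reaches the threshold."""
    if any(inhibitory):
        return 0
    return 1 if sum(inputs) >= threshold else 0


def and_gate(a, b):
    return mp_neuron([a, b], threshold=2)


def or_gate(a, b):
    return mp_neuron([a, b], threshold=1)


def not_gate(a):
    return mp_neuron([], threshold=0, inhibitory=[a])


def xor_gate(a, b):
    # A small network of units: "a OR b, but NOT (a AND b)".
    return and_gate(or_gate(a, b), not_gate(and_gate(a, b)))


print([xor_gate(a, b) for a in (0, 1) for b in (0, 1)])   # [0, 1, 1, 0]
```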

Beyond Turing Machines

As McCulloch himself later put it, what they had been trying to do was treat the brain “as a Turing machine,” referring to Turing’s theoretical model of computation. However, connectionism went beyond simply replicating existing models. It emphasized the brain’s ability to learn and adapt by modifying these connections, a feature with no direct counterpart in the Turing machine model.

Building an Artificial Brain – The First Neural Networks Take Flight

From Brains to Bytes

Imagine recreating the human brain’s learning and recognition skills within a machine. That’s the ambitious realm of neural networks, and it all started in 1954 with Belmont Farley and Wesley Clark of MIT, who ran the first artificial neural network. Limited by the computer memory of the day, it had no more than 128 neurons.

Simple Patterns, Big Potential

Though basic, Farley and Clark’s network could be trained to recognize simple patterns, proving the viability of the connectionist approach. Even more exciting was their discovery that randomly destroying up to 10% of the neurons in a trained network did not affect its performance. This mirrored the brain’s ability to tolerate limited damage, hinting at the potential for robust and adaptable AI systems.

Demystifying the Network

Let’s dive into the mechanics:

- Input & Output: Imagine five neurons – four for input (detecting patterns) and one for output (the network’s response). Each neuron is either “firing” (active) or “not firing” (inactive).

- Weighted Connections: Each connection between neurons has a “weight,” influencing the output neuron’s behavior. Think of it as the strength of the signal passing between them.

- Firing Threshold: The output neuron has a set “firing threshold.” If the sum of weighted input signals from active neurons reaches or exceeds this threshold, the output neuron fires, triggering a specific response.
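
The firing rule for this five-neuron network takes only a few lines of Python. The weights and threshold below are made-up values chosen for illustration.

```python
def output_fires(active_inputs, weights, threshold):
    """Five-neuron net: four input neurons (0 = silent, 1 = firing) feed one output.
    The output fires when the summed weights of the firing inputs reach the threshold."""
    total = sum(w for x, w in zip(active_inputs, weights) if x == 1)
    return total >= threshold


WEIGHTS = [0.2, 0.5, 0.1, 0.4]   # hypothetical connection strengths
THRESHOLD = 0.6                  # hypothetical firing threshold

print(output_fires([1, 1, 0, 0], WEIGHTS, THRESHOLD))   # True  (0.2 + 0.5 = 0.7)
print(output_fires([1, 0, 1, 0], WEIGHTS, THRESHOLD))   # False (0.2 + 0.1 = 0.3)
```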

Training the Network

Now, how does this network learn? It’s a simple two-step dance:

- Feed the Network: Introduce a pattern (like a specific shape) and observe the output.

- Adjust the Weights: If the output is correct, leave the weights alone. If the output neuron failed to fire when it should have, increase by a small fixed amount the weight of each connection from the active input neurons; if it fired when it should not have, decrease those weights by the same small amount.

This training process, repeated with various patterns, gradually “sculpts” the network’s weights until, eventually, it responds correctly to each pattern. The remarkable aspect? This learning is purely mechanical, driven by a simple procedure without any human intervention or adjustment.
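
The two-step training procedure can be sketched as follows, using the rule that weights change only after a mistake and only on connections from active inputs. The patterns, step size and threshold are hypothetical; for patterns that a single output neuron can separate at all, this kind of error-correction procedure is guaranteed to settle on suitable weights.

```python
def net_fires(pattern, weights, threshold):
    """The output fires when the summed weights of the firing inputs reach the threshold."""
    return sum(w for x, w in zip(pattern, weights) if x) >= threshold


def train_output_neuron(examples, n_inputs=4, threshold=1.0, step=0.25, max_epochs=100):
    """examples: list of (pattern, desired_output) pairs with 0/1 values."""
    weights = [0.0] * n_inputs
    for _ in range(max_epochs):
        mistakes = 0
        for pattern, desired in examples:
            actual = int(net_fires(pattern, weights, threshold))   # step 1: feed and observe
            if actual == desired:
                continue                                  # correct: leave the weights alone
            mistakes += 1
            delta = step if desired == 1 else -step       # too quiet -> raise; too eager -> lower
            for i, x in enumerate(pattern):
                if x:                                     # step 2: only active inputs' weights change
                    weights[i] += delta
        if mistakes == 0:
            break
    return weights


# Hypothetical task: the output should fire exactly when input 0 is active.
EXAMPLES = [([1, 0, 0, 0], 1), ([0, 1, 0, 0], 0), ([1, 1, 0, 0], 1), ([0, 0, 1, 1], 0)]
print(train_output_neuron(EXAMPLES))   # [1.0, 0.25, 0.0, 0.0]
```

With these numbers the weights settle after a few passes: input 0 alone now reaches the threshold, while input 1 alone does not.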

Perceptrons – The Birth of Artificial Minds

Enter the Perceptron

In 1957, Frank Rosenblatt, a charismatic pioneer at Cornell University, embarked on a revolutionary quest: building artificial brains. He called them perceptrons, laying the groundwork for the field of artificial neural networks (ANNs) as we know it today.

Beyond Simple Patterns

Rosenblatt went beyond the two-layer networks of Farley and Clark. He envisioned multilayer networks capable of learning complex patterns and solving intricate problems. His key contribution? Generalizing the Farley-Clark training procedure so that it could be applied to multilayer networks, a method he called “back-propagating error correction.” It allowed these networks to learn from their mistakes, a crucial step towards intelligent machines.
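
Rosenblatt’s own procedure differed in detail from the back-propagation algorithm that became standard after Rumelhart, Hinton and Williams popularized it in 1986, but the idea of sending error signals backwards through the layers is the same. The Python sketch below shows the modern version on XOR, a task no single-layer network can solve. The network size, learning rate and epoch count are illustrative, and depending on the random seed such a small network can stall in a poor local minimum.

```python
import math
import random

XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_xor(hidden=3, epochs=5000, lr=0.5, seed=0):
    """Train a 2-input, `hidden`-unit, 1-output sigmoid network on XOR by backpropagation.
    Returns (loss before training, loss after training, predict function)."""
    rng = random.Random(seed)
    w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]   # input -> hidden
    b1 = [rng.uniform(-1, 1) for _ in range(hidden)]
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]                        # hidden -> output
    b2 = rng.uniform(-1, 1)

    def forward(x):
        h = [sigmoid(w1[j][0] * x[0] + w1[j][1] * x[1] + b1[j]) for j in range(hidden)]
        y = sigmoid(sum(w2[j] * h[j] for j in range(hidden)) + b2)
        return h, y

    def mean_squared_error():
        return sum((forward(x)[1] - t) ** 2 for x, t in XOR_DATA) / len(XOR_DATA)

    before = mean_squared_error()
    for _ in range(epochs):
        for x, t in XOR_DATA:
            h, y = forward(x)
            delta_out = (y - t) * y * (1 - y)      # error signal at the output unit
            for j in range(hidden):
                # Send the output error back through w2[j] (its value before this update).
                delta_h = delta_out * w2[j] * h[j] * (1 - h[j])
                w2[j] -= lr * delta_out * h[j]
                w1[j][0] -= lr * delta_h * x[0]
                w1[j][1] -= lr * delta_h * x[1]
                b1[j] -= lr * delta_h
            b2 -= lr * delta_out
    after = mean_squared_error()
    return before, after, lambda x: forward(x)[1]


before, after, predict = train_xor()
print(before, after)
print([round(predict(x)) for x, _ in XOR_DATA])
```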

From Visionary to Movement

Rosenblatt’s work wasn’t just technical brilliance; it was a spark. His infectious enthusiasm ignited a wave of research across the US, with numerous teams delving into the fascinating world of perceptrons. They embraced the term connectionism, coined by Rosenblatt, to highlight the crucial role of connections between neurons in the learning process. This term, adopted by modern researchers, continues to define the field.

Legacy of Innovation

Rosenblatt’s trailblazing work with perceptrons and back-propagation laid the foundation for the explosive growth of ANNs in recent years. His ideas, refined and expanded by generations of researchers, have powered groundbreaking advancements in fields like computer vision, natural language processing, and robotics.

AI Cracks the Code – Learning Verb Tenses Without Rules

Imagine a computer mastering English grammar, not through pre-programmed rules, but by learning like a child. Sounds far-fetched? Not according to a groundbreaking experiment that used connectionism, a revolutionary approach to artificial intelligence.

Meet the Verb Conjugator

In 1986, David Rumelhart and James McClelland at UC San Diego created a neural network of 920 artificial neurons, mimicking the brain’s structure. This network’s task? Conquering the notoriously tricky world of past tense verb conjugation.

Learning by Example

Forget rulebooks and drills. This network learned by feasting on verb “root forms” like “come,” “look,” and “sleep,” fed into its input layer. A helpful “supervisor program” compared the network’s guess with the desired past tense (for “come,” the target was “came”). It then subtly adjusted the connections between neurons, nudging the network towards the correct answer.

From Familiar to Unfamiliar

This process, repeated for 400 verbs over 200 rounds, produced a striking result. The network mastered the original verbs. It also generalized its knowledge to form the past tenses of unfamiliar verbs it had never seen before: “guard” became “guarded,” “weep” became “wept,” and “cling” became “clung.” It even picked up finer details such as doubling the final consonant of “drip” to form “dripped.” This was learning by analogy, somewhat like the way humans master language.

Beyond Rules, a Network of Knowledge

This feat, known as “generalization”, highlights the power of connectionism. Unlike rule-based AI, information isn’t stored in specific locations but distributed across the network’s web of connections. The know-how to form “wept” from “weep”, for example, doesn’t reside in one neuron, but in the intricate dance of weighted connections formed through training. This echoes the human brain’s seemingly magical ability to store and retrieve information in a holistic, interconnected way.

Unlocking the Mind’s Secrets

This verb-conjugating AI marks a crucial step in understanding how human brains learn and process language. By studying connectionism, researchers inch closer to unraveling the mysteries of our own minds and building truly intelligent machines that can not only follow rules but also learn and adapt like humans.

The Many Facets of Neural Networks – Beyond Conjugation

While the verb-conjugating experiment showcases the incredible learning abilities of connectionism, its applications extend far beyond grammar lessons. Let’s dive into the diverse world of neural networks and witness their transformative power in various fields:

Visual Perception

Neural networks are visual masters, capable of recognizing faces, objects, and even distinguishing individuals in a crowd. Imagine feeding them a picture of your pet – they can tell your fluffy feline from your loyal canine companion with astounding accuracy!

Language Processing

From scribbles to speech, neural networks bridge the communication gap. They convert handwritten notes into digital text, transcribe your spoken words like magic, and even translate languages with impressive fluency. No more deciphering messy handwriting or struggling to order food in a foreign land!

Financial Analysis

Neural networks are financial wizards, predicting loan risks, valuing real estate, and even forecasting stock prices. They help banks make informed lending decisions, investors navigate the market with confidence, and businesses optimize their financial strategies.

Medicine

In the realm of healthcare, neural networks become life-saving heroes. They assist doctors in detecting lung nodules and heart arrhythmias, predict adverse drug reactions, and personalize treatment plans. Their keen eyes and swift analyses can make a world of difference in diagnosing illnesses and saving lives.

Telecommunications

Smooth connections and clear calls are powered by neural networks. They help manage complex phone networks and even cancel out pesky echoes on satellite links. Thanks to these silent guardians, your calls stay uninterrupted and crystal clear, no matter the distance.

These are just a few examples of the incredible versatility of neural networks. As research progresses, their applications are set to expand even further, revolutionizing industries, enhancing our lives, and pushing the boundaries of what machines can learn and achieve.

Nouvelle AI

Move over, human-level AI: Nouvelle AI throws the rulebook away and charts a thrilling new course in robot intelligence. Pioneered by Rodney Brooks at MIT, this unconventional approach ditches the lofty goal of replicating human minds and sets its sights on something smaller, yet arguably more attainable: insect-level intelligence.

Breaking Free from Symbolic Shackles

Unlike its symbol-obsessed predecessors, Nouvelle AI rejects the notion of building elaborate internal models of reality. These models, like giant mental maps, often suffer from the frame problem: the ever-growing burden of updating every possible change in the real world within the model. Nouvelle AI takes a refreshingly pragmatic stance: true intelligence lies in adapting and interacting with the real world itself.

From Simple Behaviors Emerge Complex Actions

Imagine a robot programmed with just two basic behaviors: avoiding obstacles and following moving objects. This seemingly simple combination can result in surprising complexity. The robot might “stalk” a moving object, cautiously approaching and stopping when too close. This emergent behavior, where sophisticated actions arise from simpler building blocks, is the beating heart of Nouvelle AI.
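A toy simulation shows how the “stalking” can emerge without being programmed directly. This Python sketch is a loose illustration, not Brooks’s actual subsumption architecture. The robot and the object share a one-dimensional track. The speeds, the safe gap, and the rule that obstacle avoidance overrides following are all assumptions made for the example.

```python
def step(robot: float, target: float, safe_gap: float = 2.0, speed: float = 1.0) -> float:
    """Return the robot's next position on a 1-D track (hypothetical setup)."""
    gap = target - robot
    # Behavior 1, highest priority: avoid obstacles. Anything closer than
    # safe_gap (including the object being followed) makes the robot halt.
    if abs(gap) <= safe_gap:
        return robot
    # Behavior 2: follow the moving object, without stepping inside safe_gap.
    move = min(speed, abs(gap) - safe_gap)
    return robot + move if gap > 0 else robot - move


robot, target = 0.0, 10.0
trace = []
for t in range(20):
    if t < 10:
        target += 0.5  # the object moves for a while, then stops
    robot = step(robot, target)
    trace.append((target, robot))

print(trace[-1])  # (15.0, 13.0): the robot closed in and halted 2 units short
```

Neither rule mentions stalking. The cautious approach-and-halt pattern comes entirely from how the two simple behaviors interact.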

Herbert the Can Collector

Meet Herbert, Brooks’s robotic pioneer, roaming the bustling MIT labs. Fueled by around 15 basic behaviors, Herbert tirelessly searched for empty soda cans, picked them up, and diligently carried them away. His seemingly purposeful actions weren’t driven by an internal map, but by continuous interaction with his environment – “reading off” the world just when needed.

Outsmarting the Frame Problem

While traditional AI struggles to keep its internal models up-to-date, Nouvelle AI sidesteps this challenge altogether. The world itself becomes the source of information, accessed dynamically through sensors – like eyes and touch sensors – instead of being stored in a static internal model. This elegant solution minimizes the need for constant updates, keeping the system nimble and efficient.

Nouvelle AI – Learning Like Bugs, Living in the Real World

Forget disembodied minds in silicon towers! Nouvelle AI throws open the doors, letting robots interact and learn directly in the messy, unpredictable world. This “situated approach” paves a new path for robot intelligence, inspired by the elegant simplicity of insects.

Turing’s Prophetic Vision

Even back in 1948, Alan Turing saw the potential. He suggested giving a machine “the best sense organs” and teaching it like a child to understand and speak our language. He presented this as an alternative to starting with abstract activities such as chess, which dominated early AI, though he concluded that both approaches should be tried.

Dreyfus, the Heretic Who Became Hero

While many dismissed him as a heretic, philosopher Bert Dreyfus challenged the symbolic AI dogma. He insisted on the importance of a physical body, interacting with the world and its “tangible objects.” Today, Dreyfus is hailed as a visionary who paved the way for the situated approach.

Simple Bugs, Complex Actions

Don’t underestimate the power of small steps. Nouvelle AI builds complex behaviors from simple interactions. Imagine a robot navigating a room, avoiding obstacles and following sounds. This seemingly basic combination can lead to surprisingly sophisticated movements, mimicking the adaptability of insects.

Critics and Caution

While Nouvelle AI holds immense promise, challenges remain. Critics point out the lack of true insect-like complexity in current systems, and over-hyped promises of consciousness and language have dampened enthusiasm.

The Road Ahead

Despite the challenges, the situated approach offers a fresh perspective on AI. By embracing the real world and learning through interaction, Nouvelle AI points the way toward robots that are not just smart, but adaptable and at home in our world.

AI in the 21st Century

The 21st century has witnessed an AI revolution, fueled by exploding processing power (Moore’s Law) and vast datasets (“big data”). From humble chatbots to game-dominating champions, AI has stepped out of the computer lab and into the real world, leaving a trail of remarkable achievements.

Moore’s Law

At the heart of the AI boom lies Moore’s Law, the observation that the number of transistors on a chip, and with it computing power, doubles roughly every two years (a figure often quoted as 18 months). This sustained growth in processing speed has provided the foundation for training increasingly complex neural networks, the workhorses of modern AI.
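The compounding behind Moore’s Law is simple arithmetic. If capacity doubles every T years, then after t years it has grown by a factor of 2^(t/T). Figures of both 18 months and two years are commonly quoted for the doubling period, so this short Python sketch takes it as a parameter.

```python
def growth_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Multiplicative growth after `years` if capacity doubles every period."""
    return 2 ** (years / doubling_period_years)


print(growth_factor(10))       # 32.0 (two-year doubling)
print(growth_factor(10, 1.5))  # roughly 101.6 (18-month doubling)
```

Over a decade, the choice of period matters a great deal: it means roughly a 32-fold versus a 100-fold increase.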

Big Data

AI thrives on data, and the 21st century has ushered in a world awash in it. From social media posts to sensor readings, massive datasets are now available in almost every imaginable domain. This abundance of data fuels the learning process of AI models, allowing them to identify patterns and make accurate predictions.

Deep Learning – Unleashing Neural Network Power

The year 2006 marked a turning point with the “greedy layer-wise pretraining” technique, which trains a network one layer at a time. This breakthrough made it feasible to train complex neural networks (multilayered structures loosely inspired by the brain) with millions of parameters. Thus deep learning was born: neural networks with four or more layers that can learn features from data without explicit instructions (unsupervised learning).

Image Recognition Champs – From Pixels to Cats and Apples

Convolutional neural networks (CNNs) are deep learning masters of image classification. Trained on massive datasets of pictures, they learn to identify characteristics within images, such as edges, textures, and shapes, and categorize the images accordingly. One such network, PReLU-net, was reported in 2015 to surpass human-level accuracy on the ImageNet image-classification benchmark.
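The core operation of a CNN layer is a small filter slid across the image. In this minimal, dependency-free Python sketch, the 2×2 filter is hand-picked to respond to a dark-to-bright vertical edge. In a real CNN, the filter values are learned during training, and libraries use much faster implementations. The tiny image is made up for the example.

```python
from typing import List

Grid = List[List[float]]


def convolve2d(image: Grid, kernel: Grid) -> Grid:
    """'Valid' 2-D convolution (no padding, stride 1).

    Like most CNN libraries, this does not flip the kernel, so strictly it is
    cross-correlation; the kernel is simply slid over the image.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# A tiny grayscale image: dark (0) on the left, bright (1) on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked filter that responds to a dark-to-bright vertical edge.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]
print(convolve2d(image, vertical_edge))  # [[0, 2, 0], [0, 2, 0]]
```

The strong response in the middle column marks where the edge sits. A CNN stacks many such learned filters, so later layers can combine edges into textures and shapes.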

Game On – AI Conquers Go and Beyond

Deep Blue’s chess victory over Garry Kasparov was impressive, but DeepMind’s AlphaGo took things to another level. AlphaGo mastered go, a vastly more complex game than chess, by learning from human games and from self-play, and it went on to defeat top go player Lee Sedol 4-1 in 2016. But the story doesn’t end there. AlphaGo Zero, starting from scratch with only the rules of go, surpassed its predecessor, winning 100-0 against AlphaGo. Its successor, AlphaZero, showed that the same self-play technique was not limited to go: it also mastered chess and shogi.

The Future of AI

The achievements of AI in the 21st century are just the tip of the iceberg. As processing power continues to increase and data becomes even more readily available, we can expect AI to expand its reach into every facet of our lives. From medicine and transportation to education and entertainment, AI is poised to shape the future in ways we can only begin to imagine.

The driverless dream – can AI steer us to Autonomous Nirvana?

Buckle up, because we’re taking a spin on the cutting edge of technology: autonomous vehicles (AVs)! Machine learning and AI are taking the wheel, promising a future where cars drive themselves and accidents become relics of the past. But is this dream ready for the real world, or are we still stuck in traffic jams of challenges?

Learning on the Fly – How AI Navigates the Road

Imagine your car training like a champion athlete, constantly learning from the data it gulps down – traffic patterns, weather conditions, even the wiggles of pedestrians. That’s machine learning in action, powering AVs to improve their algorithms and conquer the intricacies of the road.

AI Takes the Wheel – But Does it Know the Rules?

Now, picture your car making split-second decisions without a human peep. That’s the magic of AI, guiding AVs through tricky situations without pre-programmed instructions for every possible scenario. But how do we ensure these decisions don’t land us in a ditch?

Testing in the Shadows – Black-Box Safety Checks

In white-box testing, testers can inspect a system’s inner workings. Black-box testing instead probes a system only through its inputs and outputs. That suits AVs, whose learned decision-making is hard to inspect directly, and they undergo rigorous black-box testing. Think of it as a supervillain trying to break your car’s defenses, only this villain works for your safety! By throwing unexpected curveballs, these tests reveal hidden weaknesses and help ensure AVs meet strict safety standards.
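The idea can be illustrated with a simple randomized black-box test in Python. Everything here is hypothetical:

- `policy_under_test` stands in for an opaque driving controller.
- The tester only calls the controller and checks its answers against a physics-based safety oracle.
- The 6 m/s² braking limit and the sampling ranges are assumed values.

Real AV validation relies on far richer scenario simulation.

```python
import random
from typing import Callable, List, Tuple

MAX_DECEL = 6.0  # m/s^2, assumed braking capability of the vehicle


def policy_under_test(speed: float, gap: float) -> bool:
    """Hypothetical opaque controller: True means 'brake'.

    The tester never reads this body; it only observes the outputs.
    """
    return gap < 2.0 * speed


def must_brake(speed: float, gap: float) -> bool:
    """Safety oracle: braking is mandatory once the obstacle is within stopping distance."""
    return gap <= speed ** 2 / (2 * MAX_DECEL)


def black_box_test(policy: Callable[[float, float], bool], trials: int = 10_000,
                   seed: int = 0) -> List[Tuple[float, float]]:
    """Throw random scenarios at the policy and collect unsafe responses."""
    rng = random.Random(seed)
    failures = []
    for _ in range(trials):
        speed = rng.uniform(0.0, 40.0)  # m/s
        gap = rng.uniform(0.0, 150.0)   # metres to the obstacle
        if must_brake(speed, gap) and not policy(speed, gap):
            failures.append((speed, gap))
    return failures


failures = black_box_test(policy_under_test)
print(f"{len(failures)} unsafe scenarios found")
```

The failures cluster at high speeds, above about 24 m/s. There, the controller’s two-second-gap heuristic undershoots the true stopping distance. The tester discovers this without ever reading the controller’s code.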

The Road Ahead – Bumps on the Journey to Utopia

Hold your horses, because the autonomous revolution isn’t here yet. Mapping millions of miles, dealing with unpredictable human drivers, and mastering “common sense” interactions are just some hurdles these AI brains need to leap. Tesla’s recent self-driving mishaps serve as a cautionary tale, reminding us that AI hasn’t quite mastered the complex social dance of the road.

Revolutionizing Language Understanding – NLP

Natural Language Processing (NLP) is a dynamic field aiming to teach computers to comprehend and process language, mirroring human capabilities. Early attempts involved rule-based coding, but the journey towards sophisticated language understanding has evolved significantly.

Progressing from Hand-Coding to Statistical NLP

In its infancy, NLP relied on hand-coded rules, which lacked the finesse to handle the nuances and exceptions of language. The advent of statistical NLP marked a shift. Instead of fixed rules, it used probabilities, estimated from large bodies of text, to attribute meanings to different textual components. This statistical approach, however, had its limitations.
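A bigram model is one of the simplest statistical NLP techniques, chosen here for illustration. It estimates the probability of each word given the word before it by counting word pairs in a corpus. The three-sentence corpus is invented, and real statistical systems used far larger corpora plus smoothing for unseen word pairs.

```python
from collections import Counter, defaultdict
from typing import DefaultDict, List

BigramCounts = DefaultDict[str, Counter]


def train_bigrams(sentences: List[str]) -> BigramCounts:
    """Count how often each word follows each other word."""
    counts: BigramCounts = defaultdict(Counter)
    for sentence in sentences:
        words = ["<s>"] + sentence.lower().split() + ["</s>"]  # sentence boundaries
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def prob(counts: BigramCounts, prev: str, nxt: str) -> float:
    """Maximum-likelihood estimate of P(nxt | prev); 0.0 if prev was never seen."""
    total = sum(counts[prev].values())
    return counts[prev][nxt] / total if total else 0.0


corpus = ["the bank raised rates", "the river bank flooded", "the bank closed early"]
model = train_bigrams(corpus)
print(prob(model, "the", "bank"))     # 2 of 3 words after "the": about 0.667
print(prob(model, "bank", "raised"))  # 1 of 3 words after "bank": about 0.333
```

The counts say nothing about whether “bank” means a riverbank or a financial institution. That gap is one illustration of the limitations of purely statistical approaches.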

The Rise of Modern NLP – Deep Learning and Language Models

Modern NLP systems leverage deep-learning models, merging AI with statistical insights. GPT-3, a groundbreaking large language model released by OpenAI in 2020, showed how far this approach could go. Trained to predict the next word in a text, it could generate fluent sentences and tackle a wide variety of problems, setting the stage for transformative applications.

GPT-3 and the Emergence of ChatGPT

GPT-3, a pioneer among large language models, laid the groundwork for ChatGPT. OpenAI released ChatGPT in November 2022, built on GPT-3.5, a refined descendant of GPT-3. ChatGPT’s uncanny ability to mimic human writing stirred discussions about the challenges of distinguishing between human-generated and AI-generated content.

NLP Applications Beyond Text – Voice Commands, Customer Service, and More

Beyond text-based applications, NLP permeates voice-operated GPS systems, customer service chatbots, and language translation programs. Businesses leverage NLP to enhance consumer interactions, auto-complete search queries, and monitor social media.

Bridging Text and Images – NLP in Image Generation

Innovative programs like OpenAI’s DALL-E, Stable Diffusion, and Midjourney use NLP to generate images based on textual prompts. Trained on extensive datasets, these programs seamlessly translate textual descriptions into captivating visual content.

Unveiling Challenges – Bias in NLP

While NLP unlocks unprecedented possibilities, it grapples with challenges. Machine-learning algorithms often reflect biases inherent in their training data. Instances of gender bias underscore the need for careful consideration in NLP applications; for example, models may associate some professions with men and others with women.
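A toy audit shows how such skew can sit in the data itself. This Python sketch counts how often a profession word appears in the same sentence as “he” or “she.” The four-sentence corpus is invented for the example, and real bias audits use far larger corpora and more careful statistics. Still, a model trained on text with these proportions has every statistical reason to reproduce them.

```python
from collections import Counter
from typing import List


def pronoun_cooccurrence(corpus: List[str], profession: str) -> Counter:
    """Count sentences in which `profession` co-occurs with 'he' or 'she'."""
    counts: Counter = Counter()
    for sentence in corpus:
        # Crude tokenization: lowercase, drop full stops, split on whitespace.
        words = set(sentence.lower().replace(".", "").split())
        if profession in words:
            counts["he"] += int("he" in words)
            counts["she"] += int("she" in words)
    return counts


toy_corpus = [
    "The nurse said she would check the chart.",
    "She worked as a nurse for ten years.",
    "The engineer said he fixed the bridge.",
    "He is an engineer at the plant.",
]
for job in ("nurse", "engineer"):
    c = pronoun_cooccurrence(toy_corpus, job)
    print(job, "he:", c["he"], "she:", c["she"])
# nurse he: 0 she: 2
# engineer he: 2 she: 0
```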

Real-World Impact – NLP Biases and Consequences

NLP biases, when unchecked, can lead to tangible consequences. The infamous 2015 case of Amazon’s résumé screening NLP program revealed gender discrimination, emphasizing the critical role of ethical development and oversight in NLP technologies.

Embracing the potential of NLP requires a nuanced understanding of its evolution, applications, and the imperative of addressing biases for a more equitable technological landscape.

Virtual Assistants – More Than Just Smart Speakers

The Evolution of Virtual Assistants

Virtual Assistants (VAs) have transcended mere voice-activated devices and now serve a myriad of functions, from scheduling tasks to guiding users on the road. The journey began in the 1960s with ELIZA, but it wasn’t until the early 1990s that IBM’s Simon introduced the concept of a true VA. Today, household names like Amazon’s Alexa, Google Assistant, Microsoft’s Cortana, and Apple’s Siri dominate the market.

Personalized AI – Beyond Chatbots

Distinguishing themselves from chatbots, VAs bring a personalized touch to human-machine communication. Adapting to individual user behavior and continuously learning from interactions, they aim to predict and cater to user needs over time.

The Mechanics of Voice Assistants

At the core of voice assistants is the ability to parse human speech, achieved through automatic speech recognition (ASR) systems. Breaking down speech into phonemes, VAs analyze tones and other voice aspects to recognize users. Machine learning enhances their sophistication, with access to vast language databases and internet resources to provide comprehensive and accurate responses.

Siri’s Milestone – Bringing VA to Smartphones

In 2010, Siri marked a significant milestone as the first VA available for download to smartphones. This breakthrough opened new avenues for on-the-go assistance and set the stage for the widespread integration of VAs into daily life.

Navigating Risks in the AI Landscape

Socioeconomic Impacts – A Job Landscape in Flux

As AI automates various tasks, concerns about job displacement arise, particularly in industries like marketing and healthcare. While AI may usher in new job opportunities, the prerequisite technical skills may pose challenges for displaced workers.

Bias in AI – Unraveling Ethical Dilemmas

The inherent biases in AI systems, exemplified by predictive policing algorithms, raise ethical concerns. Reflecting human biases, these algorithms risk perpetuating and exacerbating societal disparities, necessitating rigorous training and oversight to rectify these issues.

Privacy Challenges – Balancing Innovation and Security

AI’s hunger for data raises privacy concerns, with the potential for unauthorized access and misuse. Generative AI adds another layer of complexity, enabling the manipulation of images and the creation of fake profiles. Policymakers face the daunting task of striking a balance that maximizes AI benefits while safeguarding against potential risks.

As virtual assistants and other AI systems become part of everyday life, it is imperative to navigate these challenges, fostering a future where AI enhances lives without compromising ethics, privacy, or socioeconomic balance.

Quest for Artificial General Intelligence (AGI)

Applied AI

As we witness the continuous triumphs of applied AI and cognitive simulation, the elusive realm of artificial general intelligence (AGI) raises profound questions. While applied AI flourishes, the quest for AGI, aiming to replicate human intellectual prowess, remains both controversial and elusive.

The Hurdles and Controversies Surrounding AGI

Exaggerated claims of success, both in professional journals and the popular press, have cast a shadow over AGI. The scale of the challenge is clear. Even an embodied system matching the overall intelligence of a cockroach has proved difficult to build, let alone one rivaling human capabilities.

Scaling AI’s Modest Achievements – A Daunting Task

Scaling up AI’s achievements proves to be a formidable hurdle. Symbolic AI’s decades of research, connectionists’ struggles with modeling even basic nervous systems, and the skepticism toward nouvelle AI highlight the complexity of the journey. Critics remain unconvinced that high-level behaviors akin to human intelligence can emerge from fundamental behaviors like obstacle avoidance and object manipulation.

The Essential Question – Can Computers Truly Think?

Delving into the heart of AGI, a fundamental question arises: can a computer genuinely think? Noam Chomsky questions whether the debate is meaningful at all, arguing that extending the word “think” to machines is an arbitrary choice of usage. Yet the central inquiry persists: under what conditions can a computer be aptly described as thinking?

The Turing Test Conundrum

Some propose the Turing test as an intelligence benchmark, but Turing himself acknowledged its limitations, and the test has blind spots in both directions. A system like ChatGPT, which readily states that it is an AI, would fail simply by revealing its nature. That calls into question the test’s role as a definition of intelligence. Conversely, Claude Shannon and John McCarthy argued that a machine could, in principle, pass simply by relying on a complete set of pre-programmed responses to every question an interrogator might ask.

The Elusive Definition of Intelligence in AI

AI grapples with the absence of a concrete definition of intelligence, even at subhuman levels. There is no precise criterion for when an artificial system has reached a given level of intelligence. As a result, judging whether an AI research program has succeeded or failed becomes subjective. Marvin Minsky likened intelligence to the unexplored regions on a map, a perspective that suggests treating it as a label for whatever problem-solving processes we do not yet understand.

In the quest for AGI, navigating these challenges requires a nuanced understanding of intelligence, moving beyond the triumphs of applied AI to unlock the true potential of artificial general intelligence.